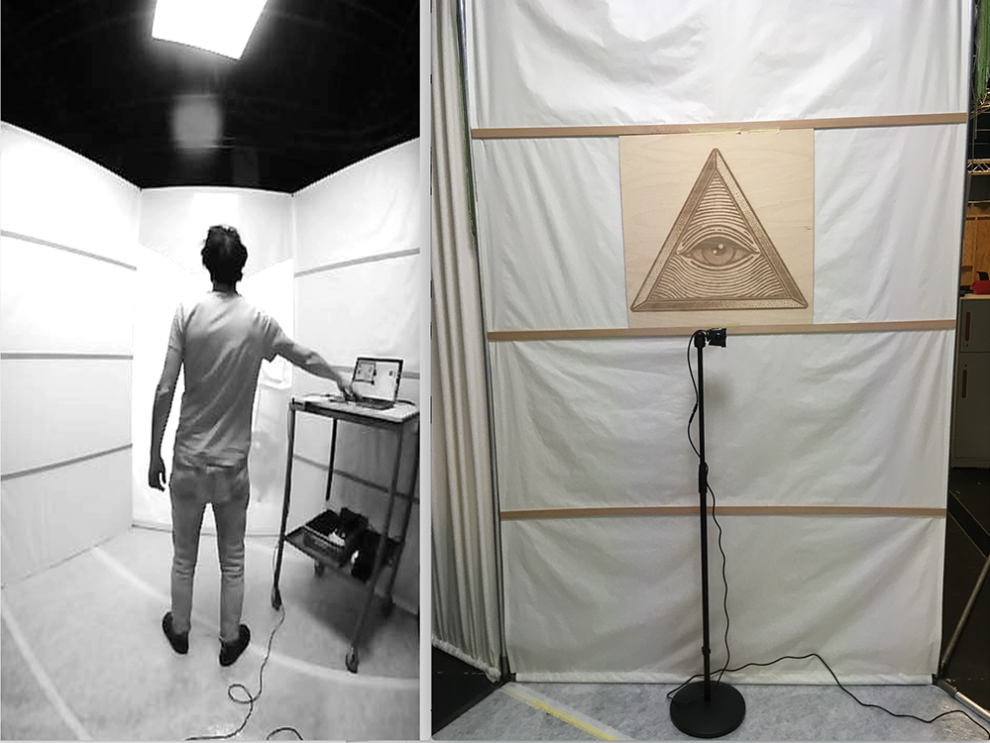

Spatial setup and Sensor Actuator System

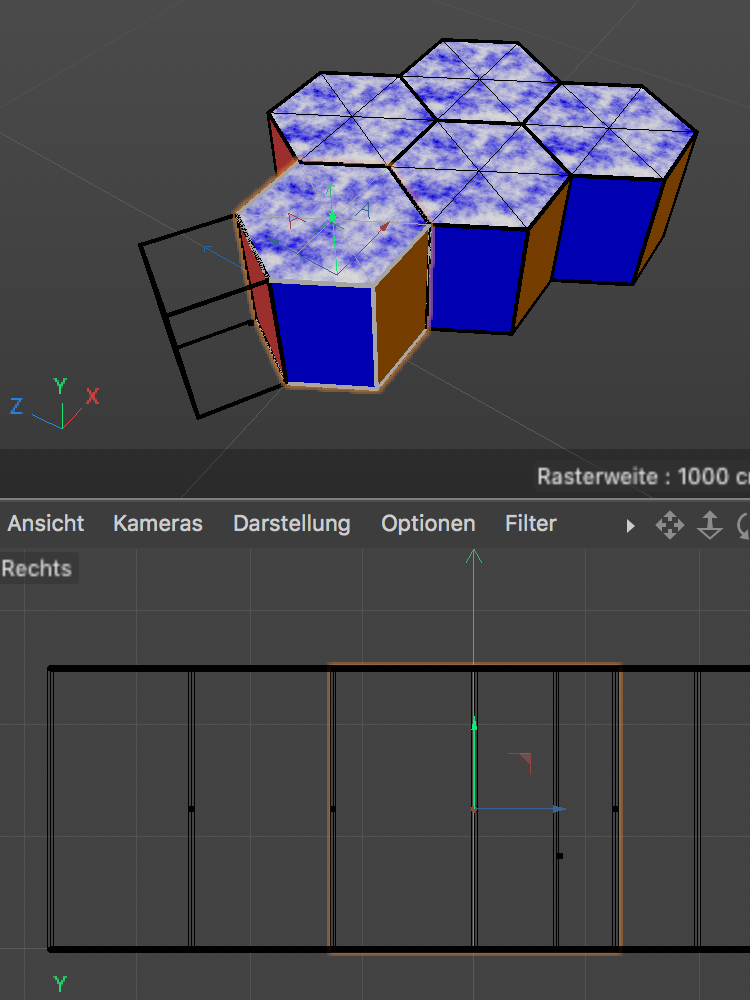

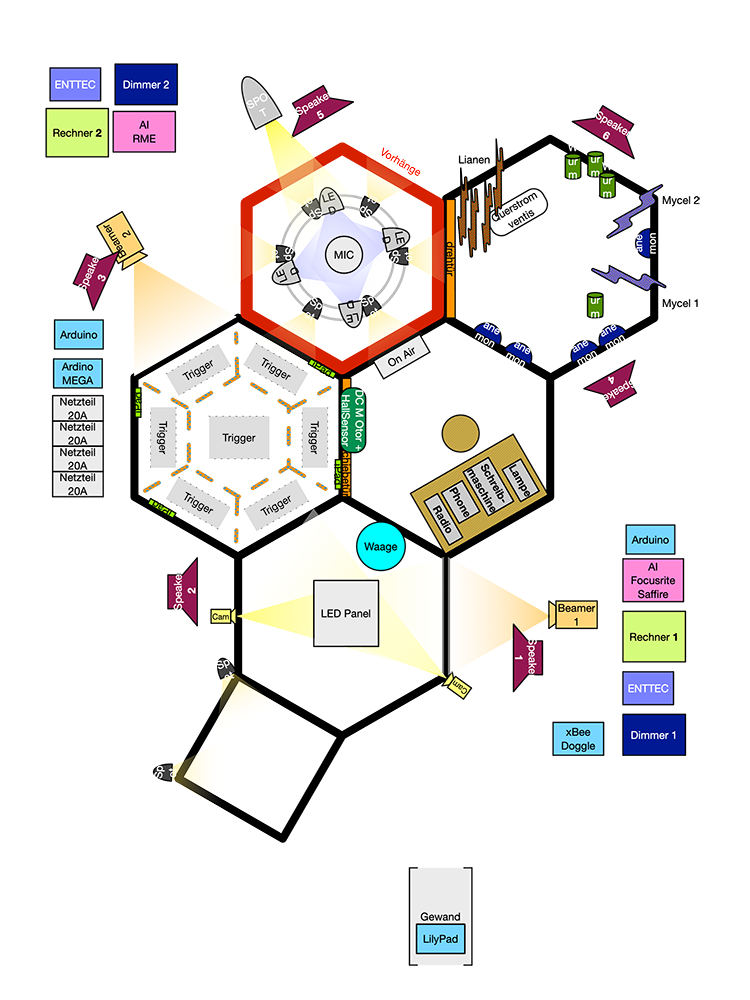

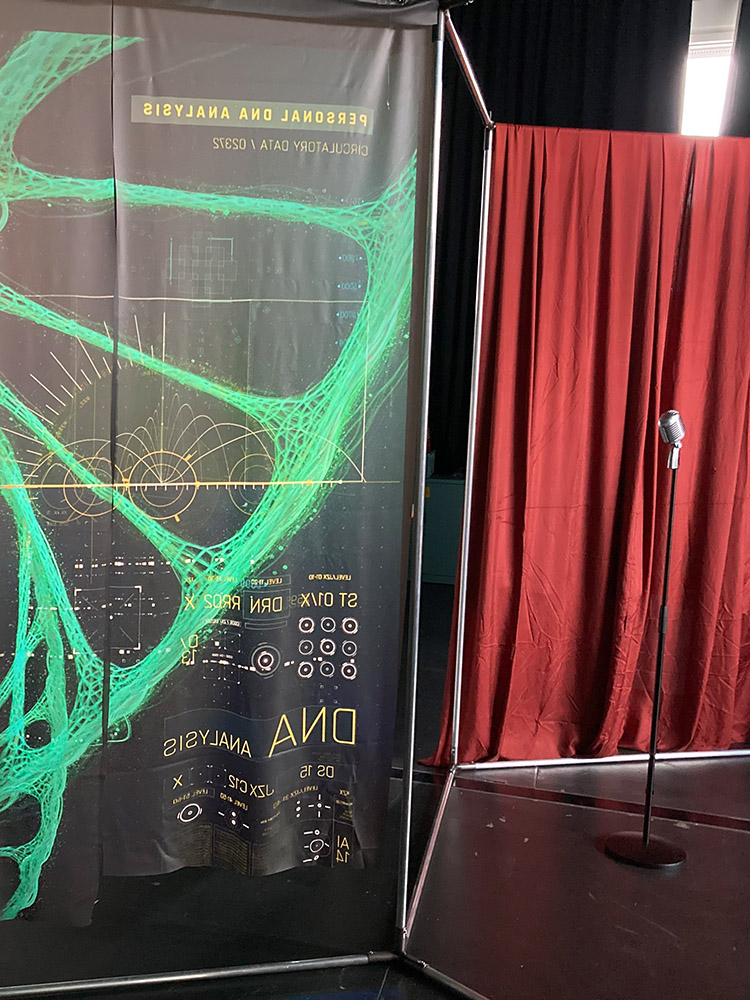

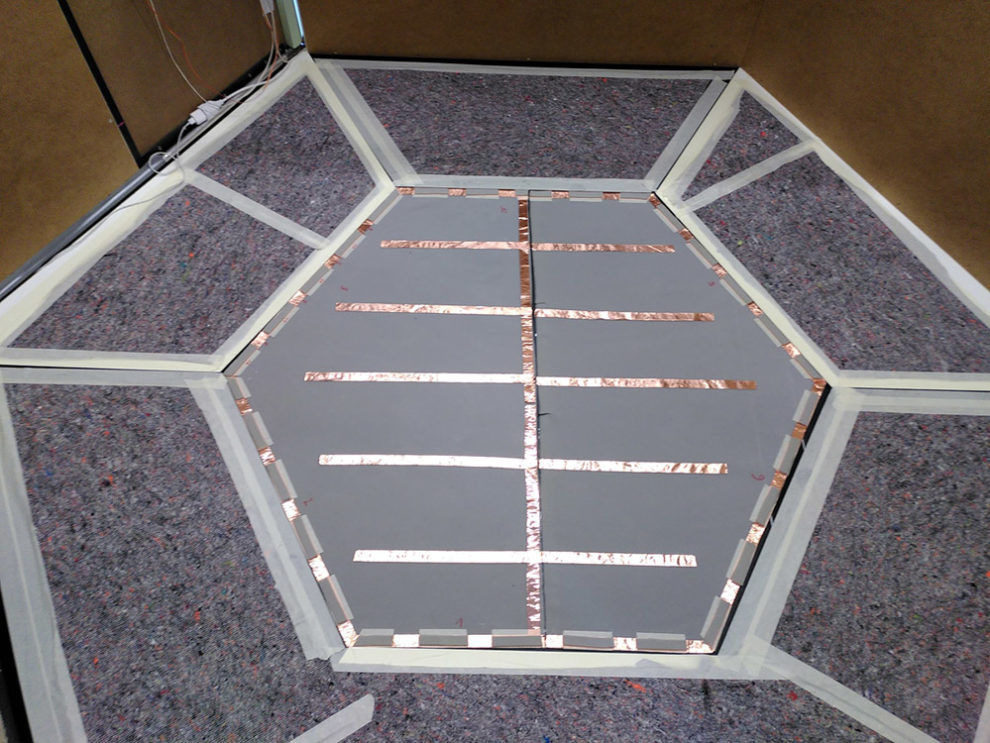

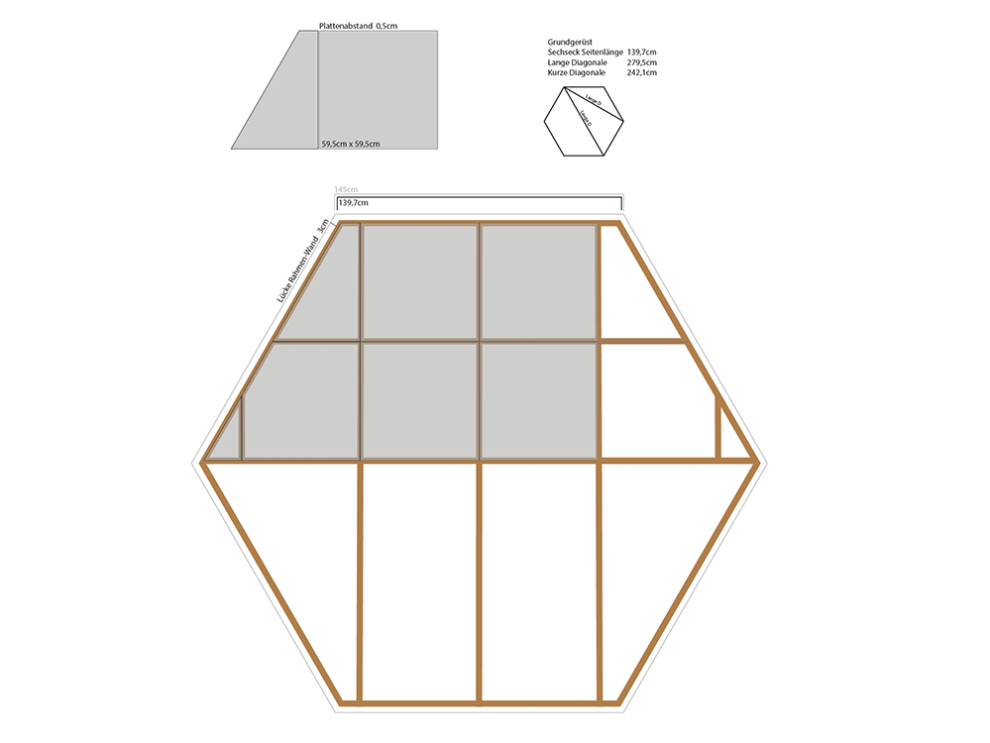

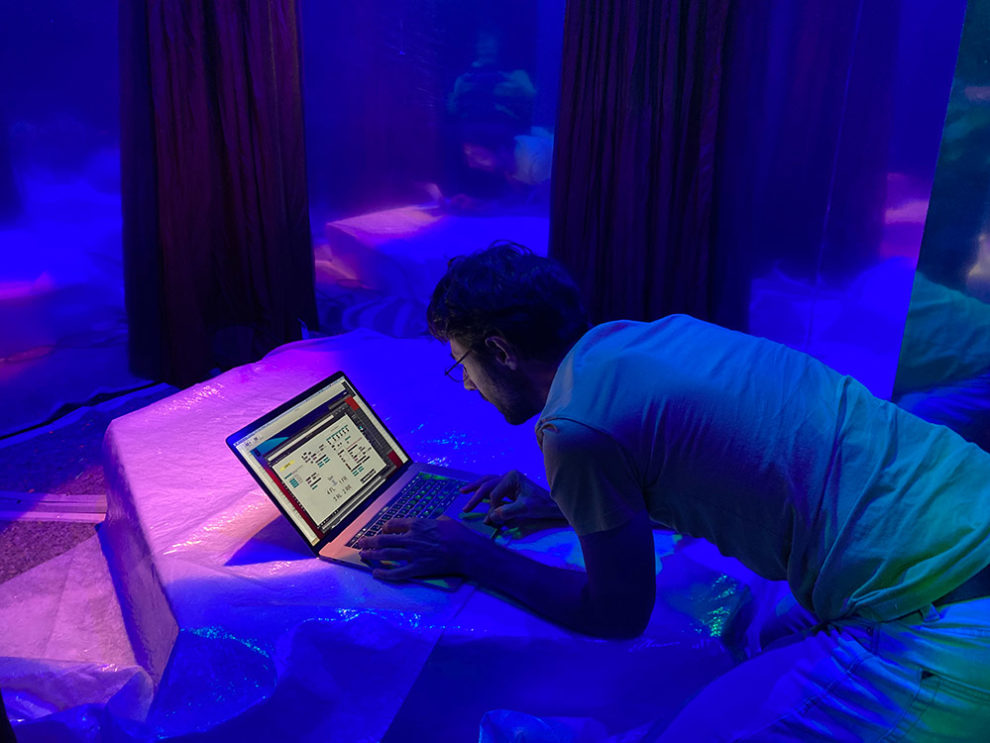

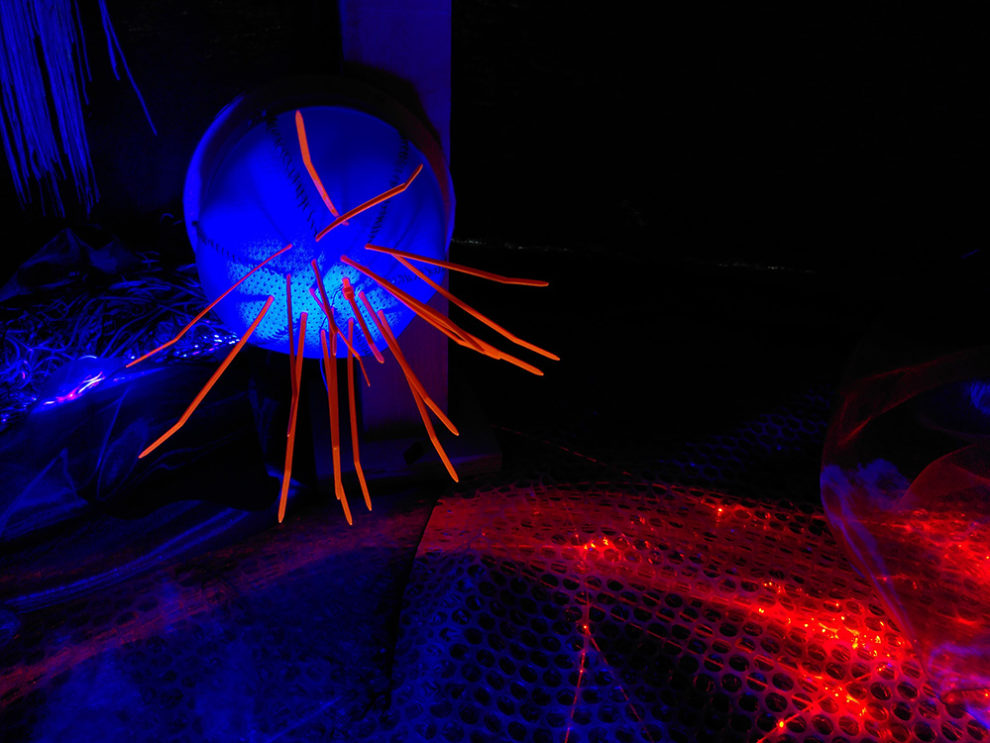

The research facility is built in the form of five honeycombs and an anteroom. The basic structure is a modular aluminum-steel framework that is covered with different materials depending on the requirements. The installation is 8.70 meters long, 6.50 meters wide and 2.50 meters high. It is divided into an experience area for the test persons (also referred to as subjects) and a backstage area for technical control and evaluation. Based on the content and interaction concept (see Journey/Design), the «Main Engine» was developed in the visual programming software Max/MSP/Jitter. Visitors are guided through the ubiCombs in a timed sequence and are prompted to interact with and make sense of the staged scenes.

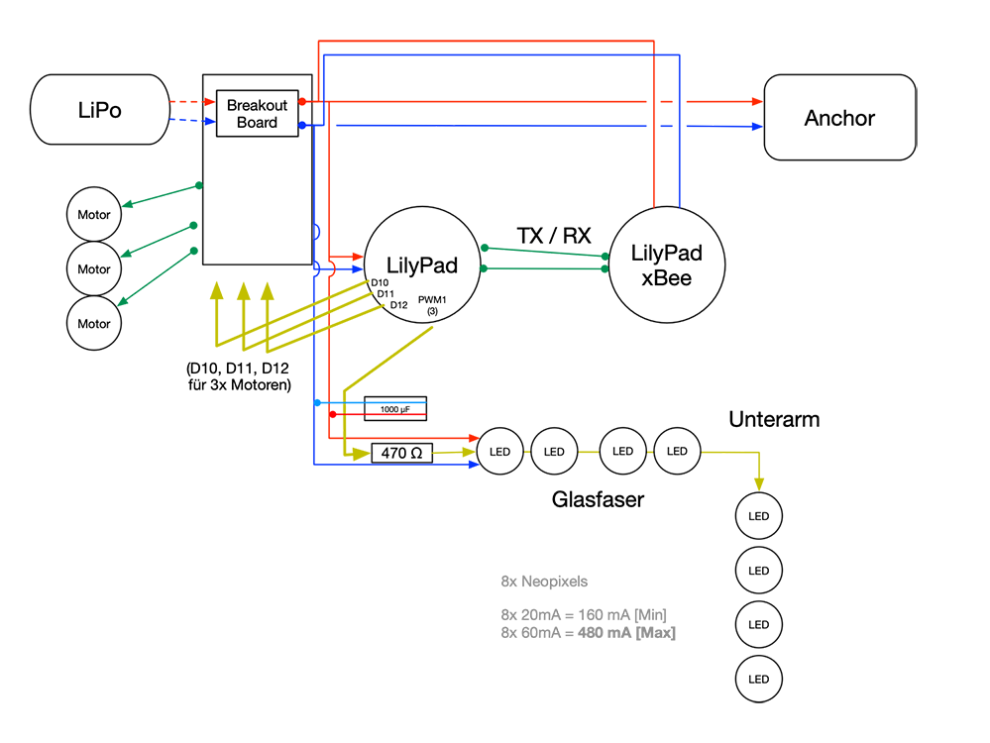

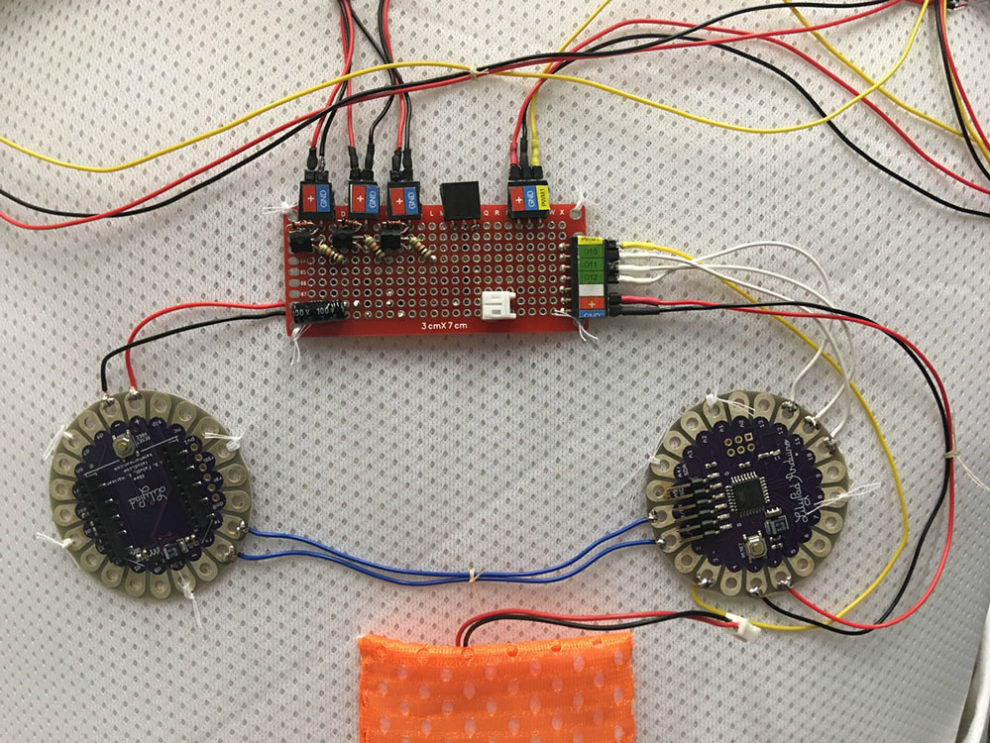

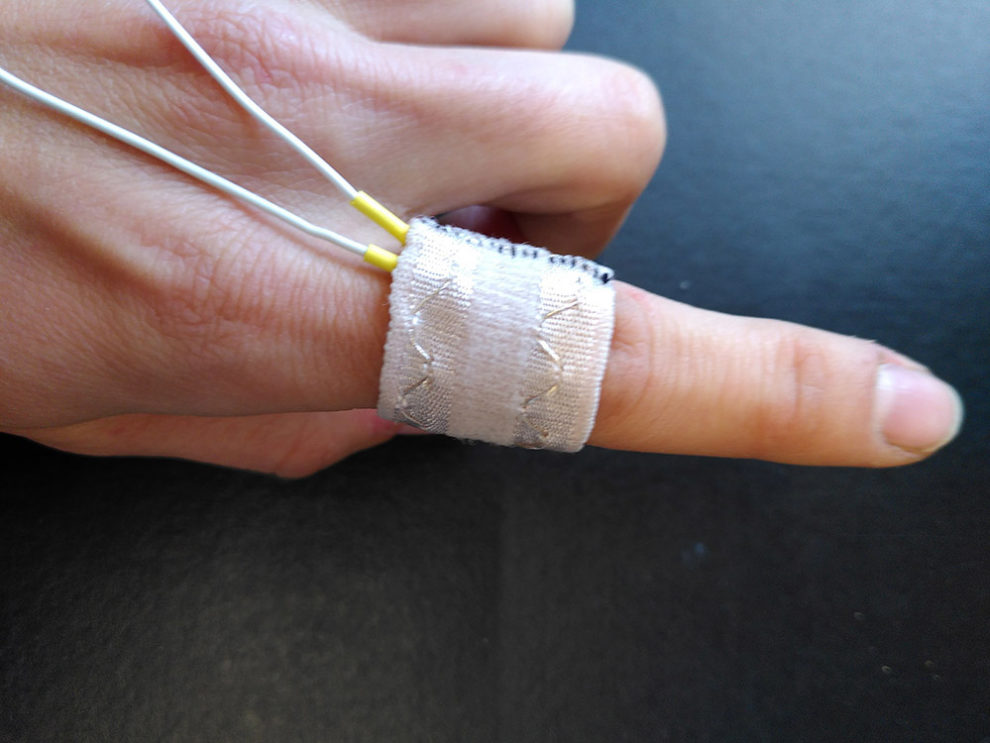

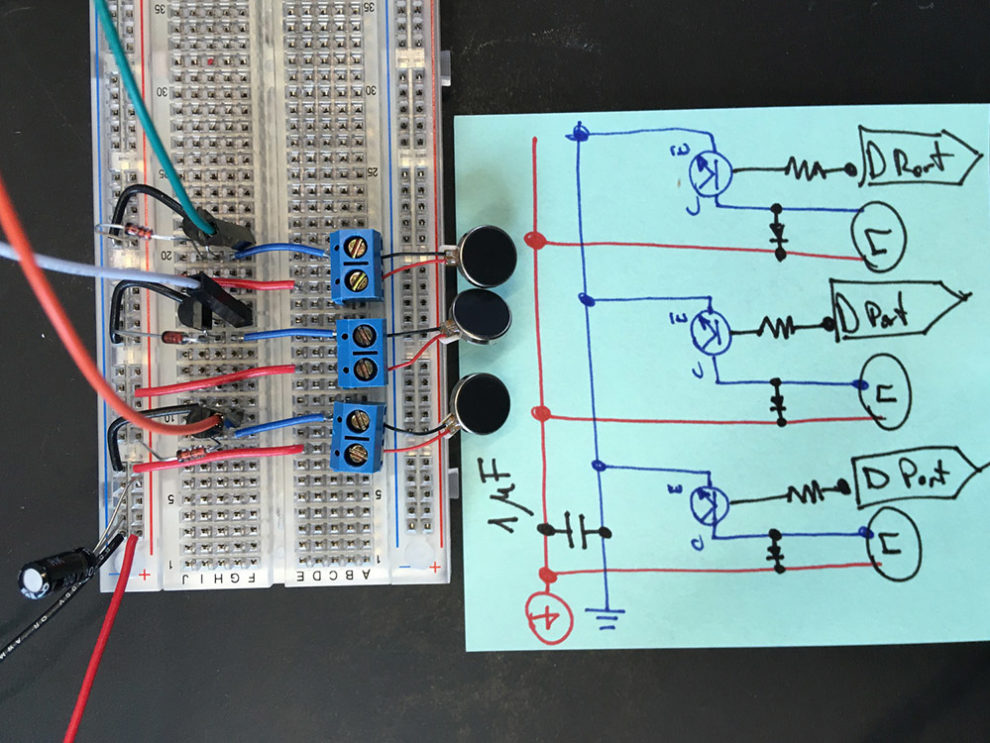

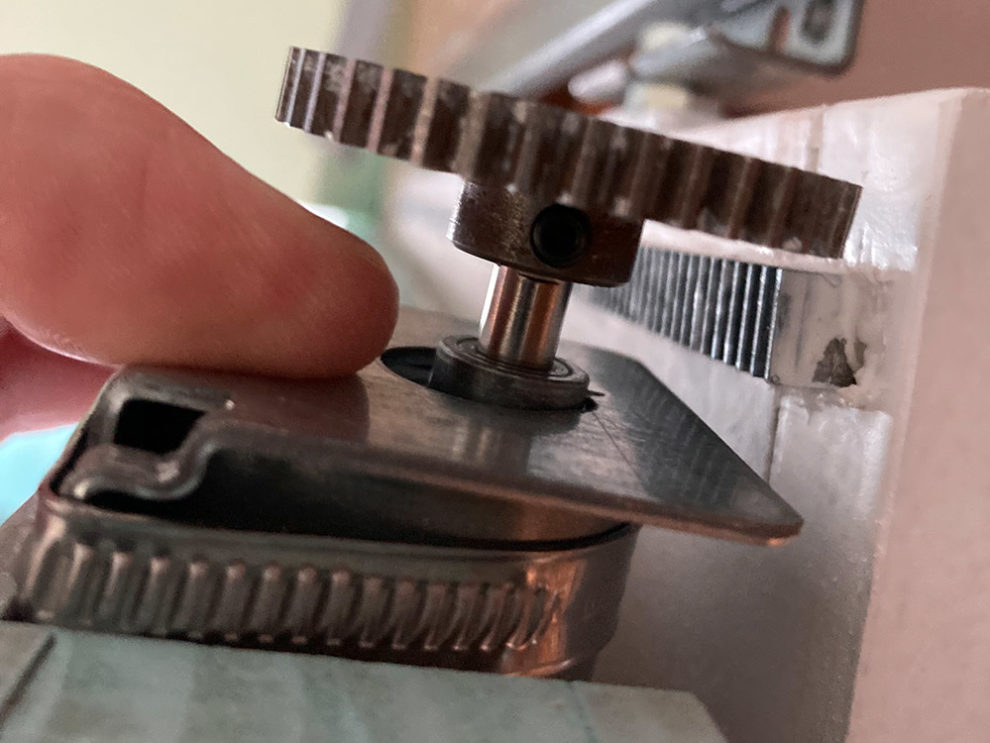

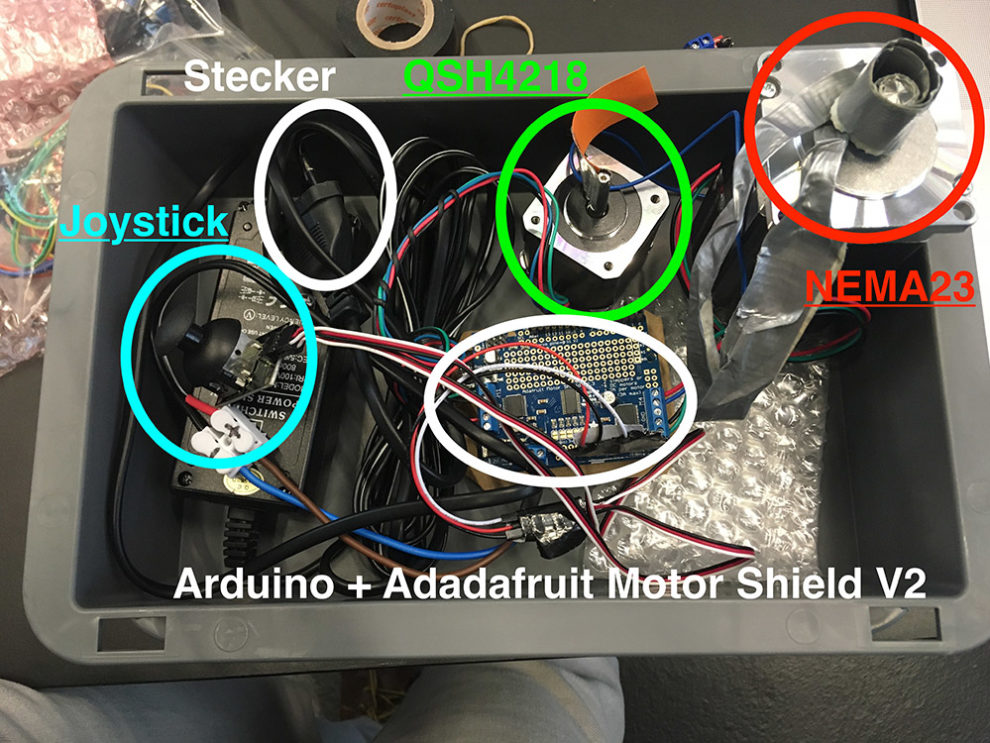

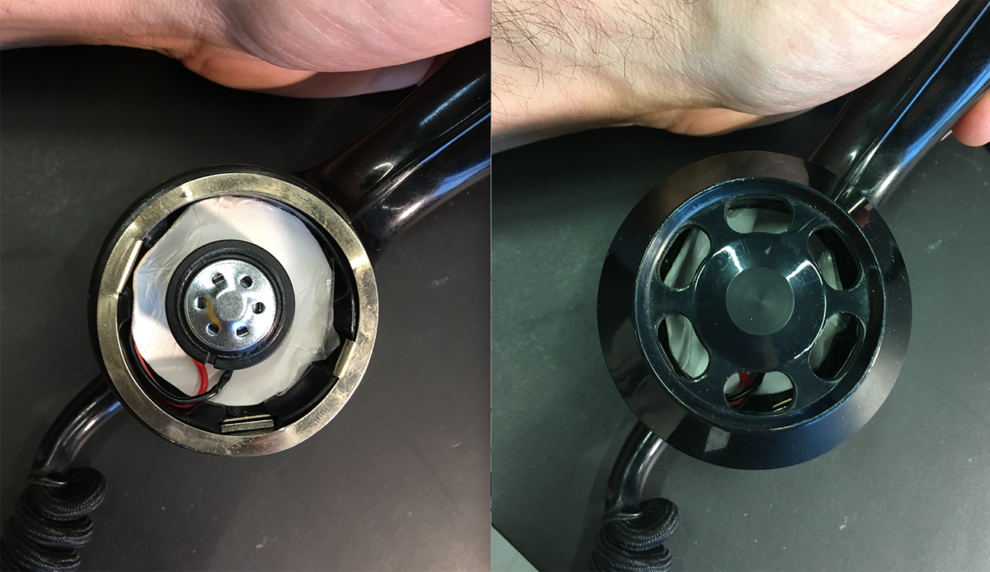

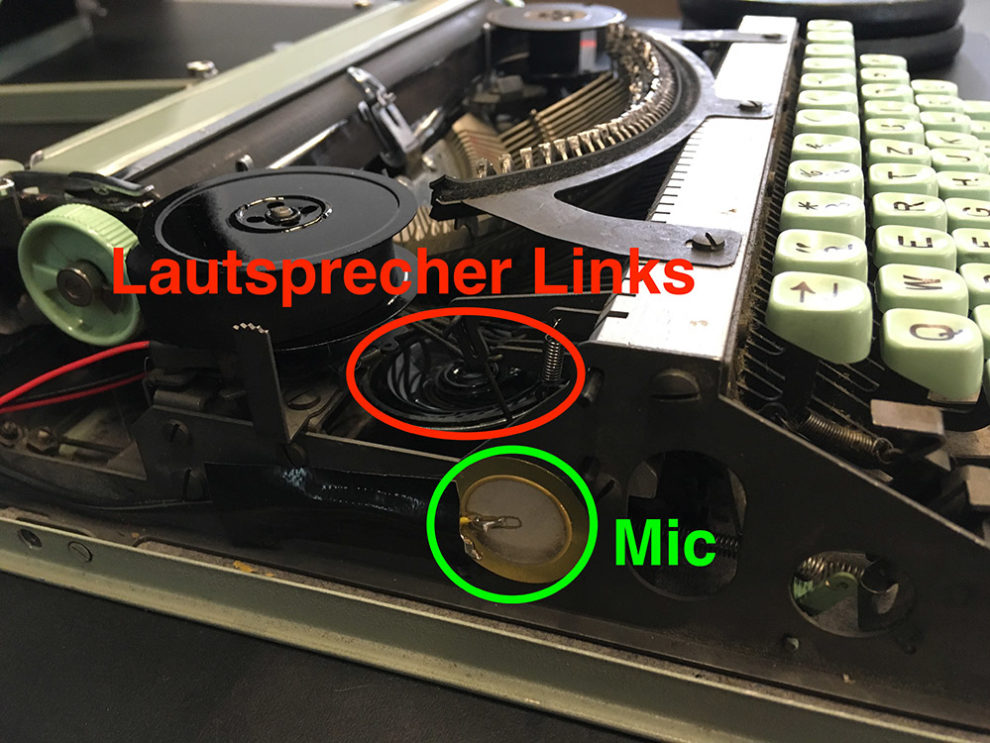

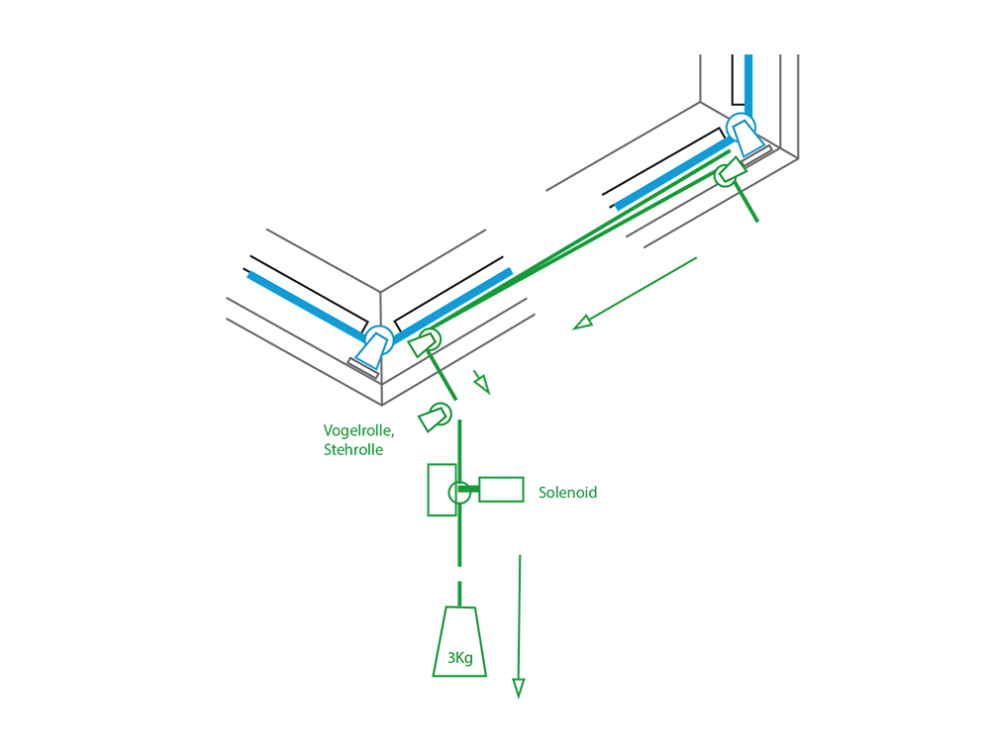

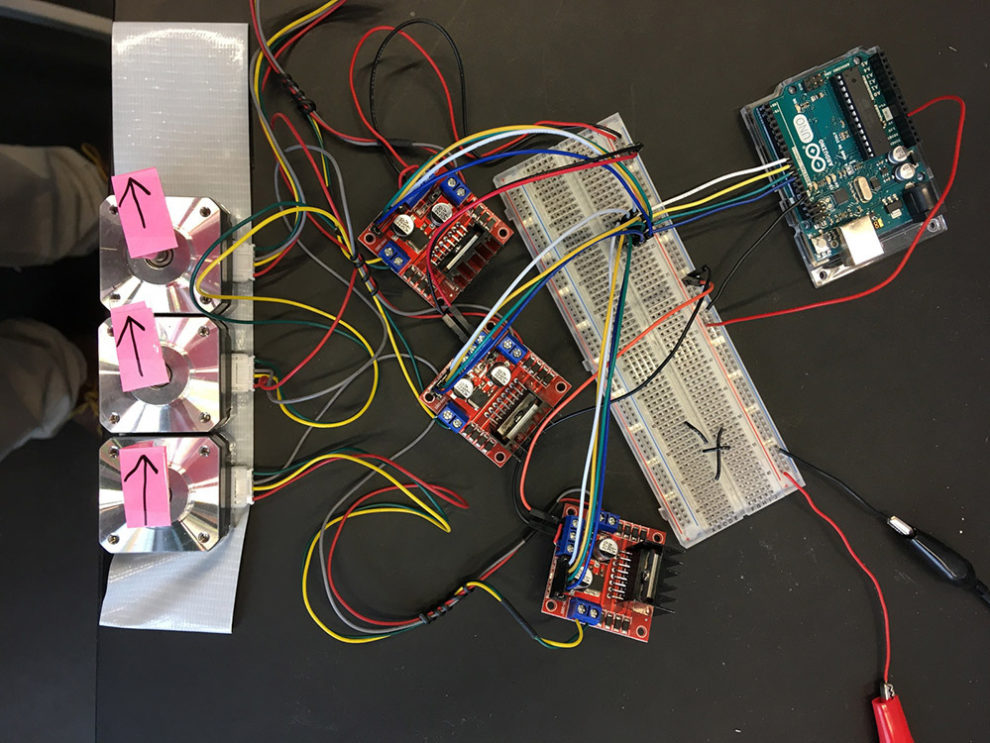

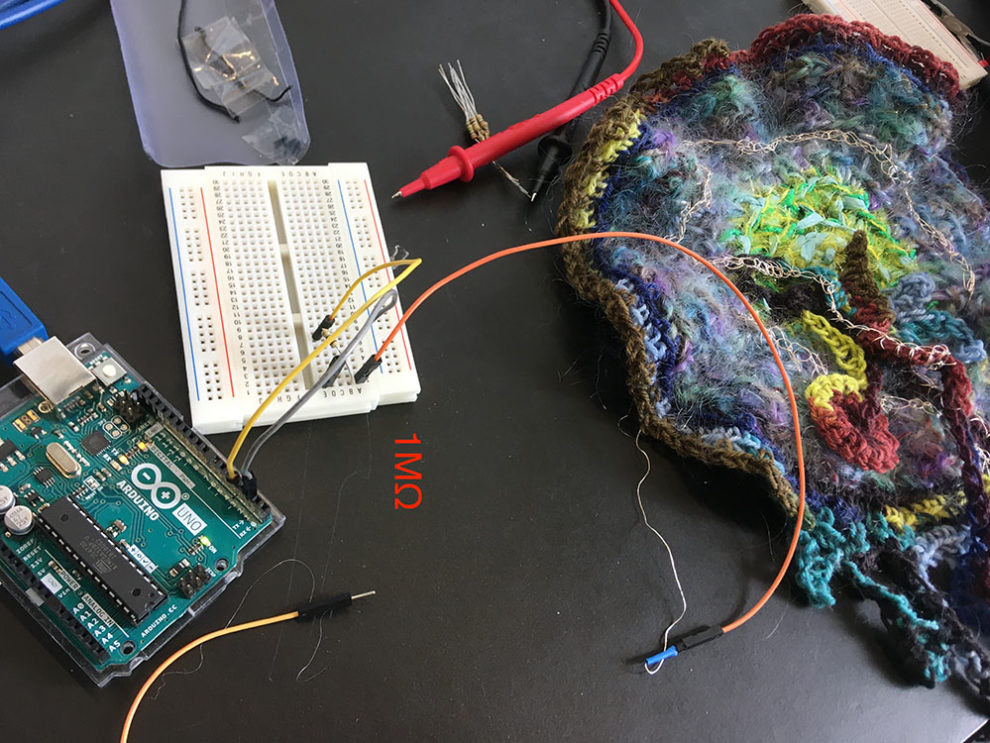

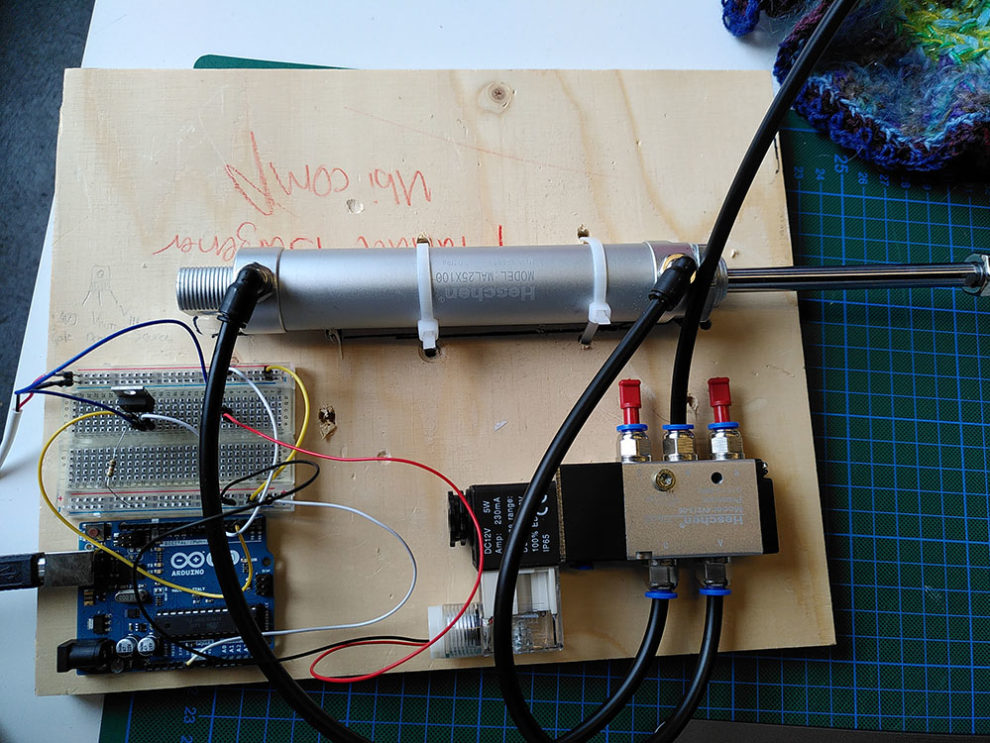

The technical basis for the installation is a sensor-actuator system: various sensing techniques record the behavior of the subjects, which is interpreted and forwarded to the responsive environment in near real time. The system responds to human actions through physically perceptible actuators such as light, sound or automation. The Pozyx spatial tracking system, mounted in the corners above the installation, captures the position, velocity, head movement and viewing direction of the subjects. In addition, various sensors (e.g. capacitive, touch, distance) are integrated into the scenes in the ubiCombs and interpreted according to the situation.

Cameras are installed in all ubiCombs for video recording and real-time observation of situations that the sensors do not capture. They offer an additional way to interpret user behavior through human observation. Using a digital «behavior checklist», observers identify and quantify key moments of behavior and enter them into the system by hand. Similarly, semantic differentials are used in the subsequent interviews; their quantified data can also be entered into the digital system and used to triangulate the qualitative data sets. A Galvanic Skin Response (GSR) sensor in the form of a finger ring is integrated into the garment that the subjects put on for the evaluation. It measures skin conductivity, and thereby the psychophysiological reactions of the subjects, during the entire run.
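The sensor-to-actuator path described above can be illustrated with a minimal Python sketch. It is not the «Main Engine» itself, which was built in Max/MSP/Jitter; the names `SensorReading`, `ActuatorCommand`, `light_on_approach`, `sound_on_touch` and `interpret`, as well as the units and thresholds, are hypothetical and chosen only to show how a reading might be interpreted and turned into an actuator command.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass(frozen=True)
class SensorReading:
    comb: int     # hypothetical index of the ubiComb the sensor sits in
    kind: str     # e.g. "distance", "touch", "capacitive"
    value: float  # reading in assumed units (meters for distance, 0..1 for touch)


@dataclass(frozen=True)
class ActuatorCommand:
    comb: int
    actuator: str  # e.g. "light", "sound"
    level: float   # normalized intensity in [0, 1]


# A rule inspects one reading and either returns a command or None.
Rule = Callable[[SensorReading], Optional[ActuatorCommand]]


def light_on_approach(reading: SensorReading) -> Optional[ActuatorCommand]:
    """Assumed rule: raise the light level as a subject comes closer than 1 m."""
    if reading.kind == "distance" and reading.value < 1.0:
        level = max(0.0, min(1.0, 1.0 - reading.value))
        return ActuatorCommand(reading.comb, "light", level)
    return None


def sound_on_touch(reading: SensorReading) -> Optional[ActuatorCommand]:
    """Assumed rule: trigger a sound when a touch sensor is clearly pressed."""
    if reading.kind == "touch" and reading.value >= 0.5:
        return ActuatorCommand(reading.comb, "sound", 1.0)
    return None


RULES: List[Rule] = [light_on_approach, sound_on_touch]


def interpret(reading: SensorReading, rules: List[Rule]) -> List[ActuatorCommand]:
    """Apply every rule to a reading and collect the resulting commands."""
    commands = []
    for rule in rules:
        command = rule(reading)
        if command is not None:
            commands.append(command)
    return commands
```

Calling `interpret(SensorReading(2, "distance", 0.25), RULES)` yields a single light command for comb 2, while a distance reading of 2.0 meters yields no command. Keeping each rule as a small function mirrors the idea that sensors are interpreted according to the situation: a scene can swap its rule list without changing the loop.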

In addition to activating the responsive environment, the sensor measurements are also used for evaluation and data analysis. They are stored, categorized and evaluated either with rule-based methods or with the help of artificial intelligence. The Evaluation Viewer visualizes the processed data, which can be viewed during the tour itself and used in the subsequent interview.
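As an illustration of what a rule-based evaluation of stored measurements could look like, the following Python sketch flags moments in a GSR recording where skin conductance rises noticeably above its recent baseline. The function name `flag_arousal`, the window size and the rise threshold are assumptions made for this example and are not taken from the project.

```python
from statistics import mean
from typing import List, Tuple


def flag_arousal(
    samples: List[Tuple[float, float]],
    window: int = 5,
    rise: float = 0.5,
) -> List[float]:
    """Return timestamps where conductance exceeds the recent baseline.

    samples: (timestamp in seconds, skin conductance in microsiemens) pairs,
             ordered by time.
    window:  number of preceding samples averaged into the baseline (assumed).
    rise:    minimum increase over the baseline that counts as a reaction (assumed).
    """
    flagged = []
    for i in range(window, len(samples)):
        # Baseline is the mean of the `window` samples just before sample i.
        baseline = mean(value for _, value in samples[i - window:i])
        timestamp, value = samples[i]
        if value - baseline > rise:
            flagged.append(timestamp)
    return flagged
```

The flagged timestamps could then be compared with entries from the behavior checklist or with the position data of the same run, which is the kind of cross-reference the Evaluation Viewer is described as supporting.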